In this example, we are evaluating a bank’s ability to appropriately accept or reject loan applications. To get started, a Master Underwriter reviewed multiple prior loan applications ahead of time and selected 10 samples. Based on her careful review, she determined that 7 of them should have been accepted and 3 should have been rejected. Her determination was recorded as the “true assessment”.

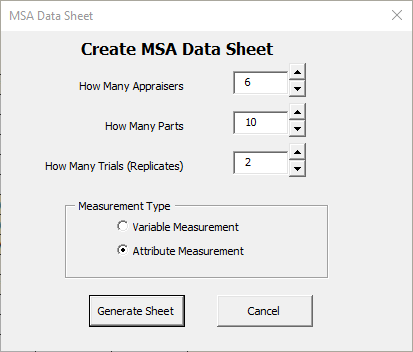

To conduct the MSA, 6 underwriters (appraisers) evaluated the 10 loan applications 2 times each. To create a template in which to record the data, click the “Measurement System Analysis” button in SuperEasyStats and fill in the fields as shown:

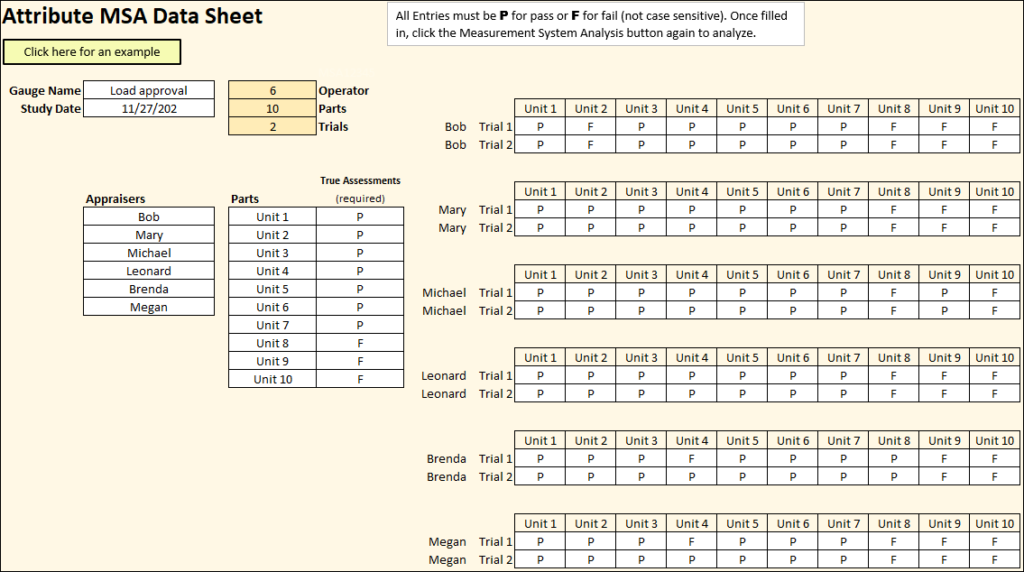

A data sheet will be generated, and you can fill in the names of the appraisers, the “true assessments” for each application (which the appraisers should not know while they are evaluating the loan applications) and, eventually, the actual assessments of the appraisers. Accepted loans were logged as a “P” (pass). Rejected loans were logged as an “F” (fail). The filled-in data sheet is shown here:

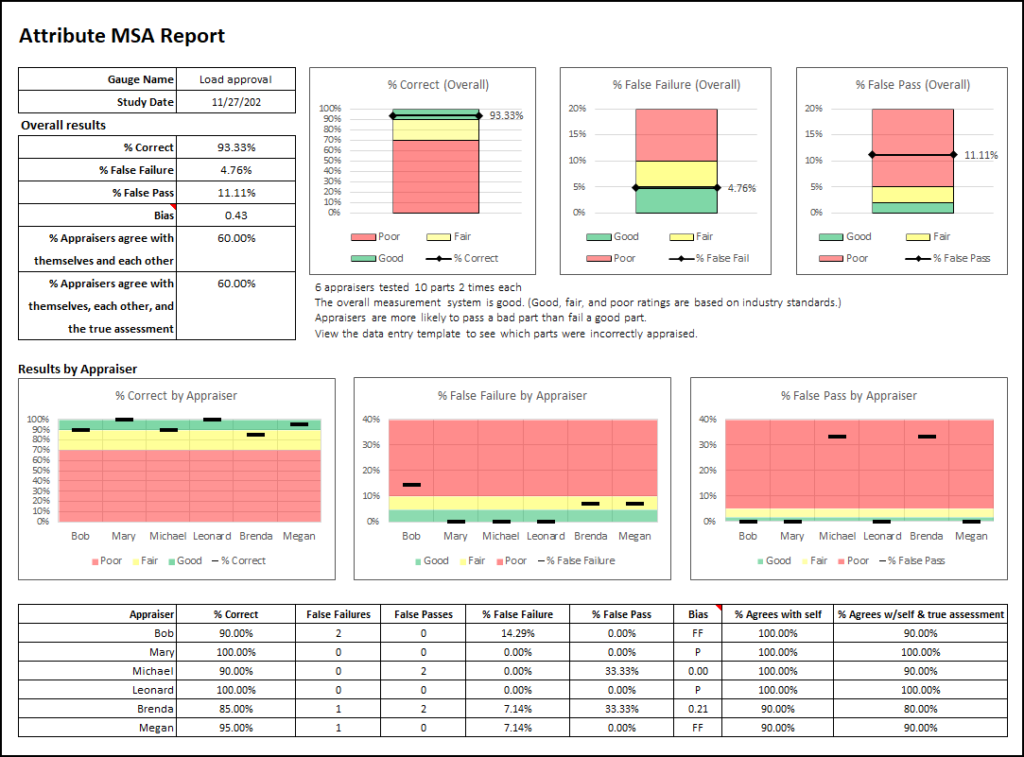

After collecting and entering the data, click the “Measurement System Analysis” button again to generate the MSA report.

The upper section summarizes the overall results (combining the scores of all appraisers). You can see that the overall % Correct is over 90%. If you look at %False Failure and %False Pass, you can see that, as a group, the appraisers are more likely to incorrectly pass a loan application that should have been rejected (11.11%) than to incorrectly fail a loan application that should have been accepted (4.76%). The report also summarizes how often the appraisers agreed with each other and how often they agreed with the true assessment (60% in both cases).
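The three summary percentages can be reproduced from raw counts. The sketch below is a minimal illustration, assuming each percentage is taken over individual assessments (6 underwriters × 10 applications × 2 trials = 120 assessments, of which 84 are ratings of applications that should pass and 36 are ratings of applications that should fail). The counts of 4 false failures and 4 false passes are not stated in the report text; they are back-calculated from the reported 4.76% and 11.11%. The function name `summary_rates` is hypothetical and not part of SuperEasyStats.

```python
def summary_rates(n_pass_apps, n_fail_apps, n_appraisers, n_trials,
                  false_fails, false_passes):
    """Return (% correct, % false failure, % false pass).

    Assumption: every percentage is computed over individual assessments,
    i.e. one appraiser's rating of one application in one trial.
    """
    # Opportunities to falsely fail: every rating of an application that should pass.
    pass_opportunities = n_pass_apps * n_appraisers * n_trials
    # Opportunities to falsely pass: every rating of an application that should fail.
    fail_opportunities = n_fail_apps * n_appraisers * n_trials
    total = pass_opportunities + fail_opportunities

    pct_false_fail = 100 * false_fails / pass_opportunities
    pct_false_pass = 100 * false_passes / fail_opportunities
    pct_correct = 100 * (total - false_fails - false_passes) / total
    return pct_correct, pct_false_fail, pct_false_pass


# 7 should-pass and 3 should-fail applications, 6 appraisers, 2 trials each.
# The error counts (4 and 4) are back-calculated, hypothetical inputs.
correct, ff, fp = summary_rates(7, 3, 6, 2, false_fails=4, false_passes=4)
print(f"% Correct {correct:.2f}, % False Failure {ff:.2f}, % False Pass {fp:.2f}")
# prints: % Correct 93.33, % False Failure 4.76, % False Pass 11.11
```

The two error rates use different denominators: a false failure is only possible on the 84 should-pass ratings and a false pass only on the 36 should-fail ratings, which is why equal error counts produce very different percentages.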

At the bottom of the report, performance is broken out by individual appraiser. The “Bias” column shows whether an appraiser is more likely to falsely pass or falsely fail an item. When a number is shown, it is simply %False Failure divided by %False Pass, so a value below 1 means the appraiser leans toward false passes and a value above 1 means the appraiser leans toward false failures. When “FF” is shown, the appraiser falsely failed one or more items but did not falsely pass any (“FF” is used instead of a number because %False Failure cannot be divided by zero). If “P” is shown, the appraiser was perfect in all of their assessments.
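The Bias column rules described above can be sketched as a small function. This is an illustration of the stated rules, not SuperEasyStats code; the name `bias_label` is hypothetical, and rounding to two decimals is an assumption chosen to match the way the report displays the value.

```python
def bias_label(pct_false_fail, pct_false_pass):
    """Return the Bias entry for one appraiser (or for the whole group).

    "P"    -> no false failures and no false passes (perfect).
    "FF"   -> false failures but no false passes (avoids dividing by zero).
    number -> %False Failure / %False Pass; below 1 leans toward false
              passes, above 1 leans toward false failures.
    """
    if pct_false_fail == 0 and pct_false_pass == 0:
        return "P"
    if pct_false_pass == 0:
        return "FF"
    return round(pct_false_fail / pct_false_pass, 2)


print(bias_label(100 * 4 / 84, 100 * 4 / 36))  # group-level rates: 0.43
print(bias_label(5.0, 0.0))                    # FF
print(bias_label(0.0, 0.0))                    # P
```

In this sketch, an appraiser who falsely passed items but never falsely failed any gets 0.0, which follows directly from the division rule.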

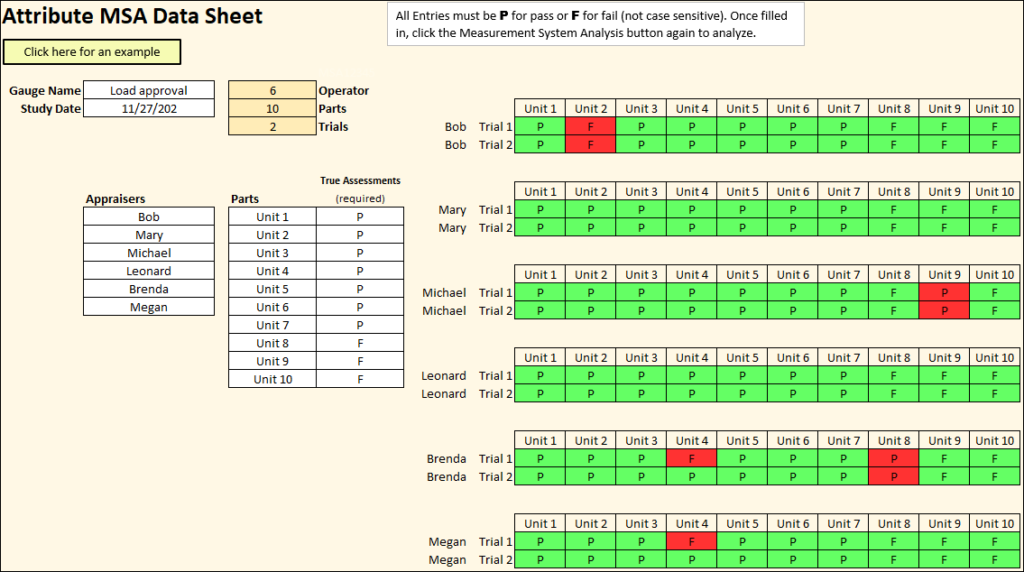

If you want to see which specific applications the appraisers assessed incorrectly, you can go back to the MSA Data Sheet. Each entry is highlighted in green or red to show whether the appraiser’s assessment matched the true assessment.
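The green/red highlighting amounts to comparing each rating with the true assessment. The sketch below applies that comparison to a tiny, made-up data sheet (two hypothetical appraisers, three applications, two trials; not the data from this study), tallies each appraiser's errors, and also computes the two agreement figures from the report summary. The agreement definition used here (every rating for an application identical across all appraisers and trials) is an assumption about how the report counts agreement.

```python
from collections import Counter

# Hypothetical miniature data sheet, not the study data.
TRUE_ASSESSMENT = {"App1": "P", "App2": "P", "App3": "F"}
RATINGS = {
    "Appraiser A": {"App1": ["P", "P"], "App2": ["P", "F"], "App3": ["F", "F"]},
    "Appraiser B": {"App1": ["P", "P"], "App2": ["P", "P"], "App3": ["P", "F"]},
}


def classify(true_value, rating):
    """Green entries are 'correct'; red entries are a false fail or a false pass."""
    if rating == true_value:
        return "correct"
    return "false fail" if true_value == "P" else "false pass"


def flag_entries(ratings, truth):
    """Map (appraiser, application, trial) to its classification."""
    flags = {}
    for appraiser, apps in ratings.items():
        for app, trials in apps.items():
            for trial, rating in enumerate(trials, start=1):
                flags[(appraiser, app, trial)] = classify(truth[app], rating)
    return flags


def agreement(ratings, truth):
    """Return (% of applications where all ratings agree,
    % where all ratings agree and match the true assessment)."""
    agree = agree_and_correct = 0
    for app, true_value in truth.items():
        all_ratings = [r for apps in ratings.values() for r in apps[app]]
        if len(set(all_ratings)) == 1:
            agree += 1
            if all_ratings[0] == true_value:
                agree_and_correct += 1
    n = len(truth)
    return 100 * agree / n, 100 * agree_and_correct / n


flags = flag_entries(RATINGS, TRUE_ASSESSMENT)
# Count only the red entries, grouped by appraiser and error type.
errors = Counter((who, label) for (who, _, _), label in flags.items()
                 if label != "correct")
print(errors)
print(agreement(RATINGS, TRUE_ASSESSMENT))
```

An application on which every appraiser consistently gives the wrong rating counts toward the first agreement figure but not the second, so the second figure can never exceed the first. Run on a full data sheet, the same error tally is one way to see which appraisers account for most of the false passes.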

Commonly used industry guidelines for assessing measurement systems are shown here. Of course, each situation might have different requirements, so you may need to adjust these guidelines to fit your specific circumstances.

Based on the guidelines:

- The Overall Effectiveness of the underwriters is 93.3%. That means the underwriters properly assessed the loans 93.3% of the time. Based on the typical rule of thumb, this is considered good.

- The % False Failure is the chance that an underwriter will reject a loan application that should have been accepted. At 4.76% this is also considered good.

- The % False Pass is the chance that an underwriter will accept a loan application that should have been rejected. At 11.11% this is considered poor.

- The Bias is calculated at 0.43 (4.76% ÷ 11.11%). Because this is well below 1, underwriters are more likely to accept a bad loan than to reject a good loan. This puts the underwriters in the poor range based on the general rules of thumb. This is especially concerning because leadership has stated that the bank cannot risk accepting bad loans.

- Looking deeper into the data, one can see that Michael and Brenda were the main contributors to the false passes, so their techniques should be investigated further. Once improvements to this measurement system have been made, a new MSA should be conducted to verify that the underwriter team has improved.
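The guideline comparison above can be expressed as a simple lookup. The thresholds in this sketch are assumptions: the effectiveness, false-pass and false-failure limits resemble widely quoted automotive-industry (AIAG) attribute-study criteria, and the bias bands are a further assumption. They reproduce the ratings given above but are not copied from the SuperEasyStats guideline table, so substitute the values your organization uses. The function names and the good/marginal/poor scale are illustrative.

```python
def rate_effectiveness(pct):
    # Assumed thresholds: >= 90% good, >= 80% marginal, otherwise poor.
    return "good" if pct >= 90 else "marginal" if pct >= 80 else "poor"


def rate_false_failure(pct):
    # Assumed thresholds: <= 5% good, <= 10% marginal, otherwise poor.
    return "good" if pct <= 5 else "marginal" if pct <= 10 else "poor"


def rate_false_pass(pct):
    # Assumed thresholds: <= 2% good, <= 5% marginal, otherwise poor.
    return "good" if pct <= 2 else "marginal" if pct <= 5 else "poor"


def rate_bias(ratio):
    # Assumed thresholds: 0.8 to 1.2 good, 0.5 to 1.5 marginal, otherwise poor.
    if 0.8 <= ratio <= 1.2:
        return "good"
    if 0.5 <= ratio <= 1.5:
        return "marginal"
    return "poor"


# Values taken from the group-level results discussed above.
results = {
    "Overall Effectiveness": rate_effectiveness(93.3),
    "% False Failure": rate_false_failure(4.76),
    "% False Pass": rate_false_pass(11.11),
    "Bias": rate_bias(0.43),
}
for metric, rating in results.items():
    print(f"{metric}: {rating}")
```

Keeping each threshold in its own small function makes it easy to tighten a single limit, for example the false-pass limit, when leadership cannot tolerate accepting bad loans.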